Introduction

Microsoft Fabric is a comprehensive analytics platform that provides a set of integrated services to ingest, store, process and analyze data in a single environment:

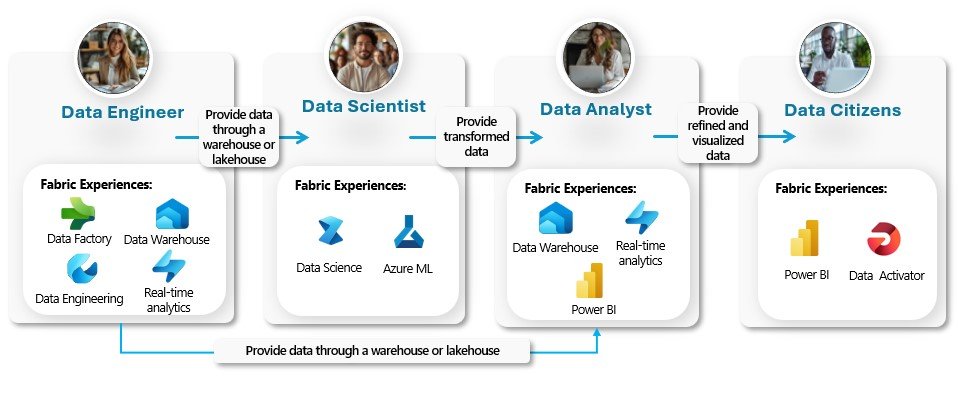

It’s also an experience-based platform, which means that users can interact with it based on their roles, needs and knowledge. Fabric focuses primarily on four types of personas:

- Data Engineer

- Data Scientist

- Data Analyst

- Data Citizen

Fabric offers different paths for each persona, enabling end-to-end analytical solutions depending on the expertise of each role. Fabric is integrated into Microsoft 365, and much of the user experience is based on the Power BI platform, making it easier for data analysts to adopt.

Therefore, Fabric has the potential to significantly expand the scope of your profession, allowing you to cover all phases of a BI solution with a much shorter learning curve. Before Fabric, it was necessary to learn each technology separately, and the specialization of each role was much more defined.

In this series of articles, I will show you how to develop an end-to-end solution with Fabric, leveraging most of your Power BI knowledge. You will see the equivalencies between Fabric and Power BI, and I will guide you through the new concepts and skills you need to take your career as a data analyst to the next level.

Problems that Microsoft Fabric Solves

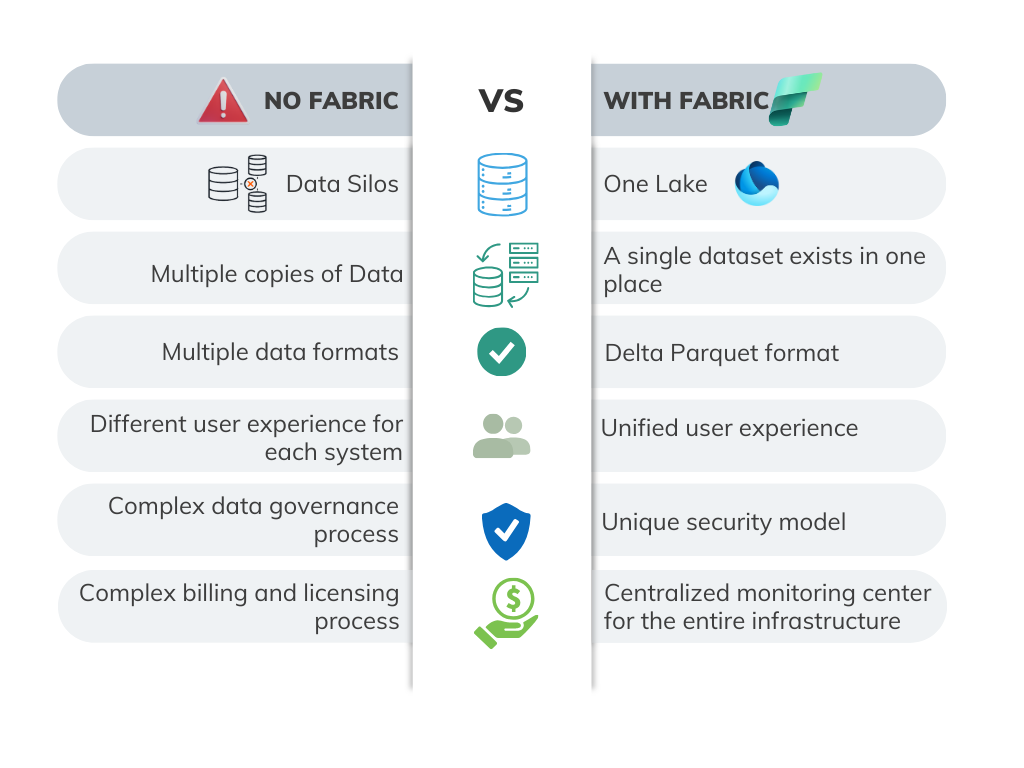

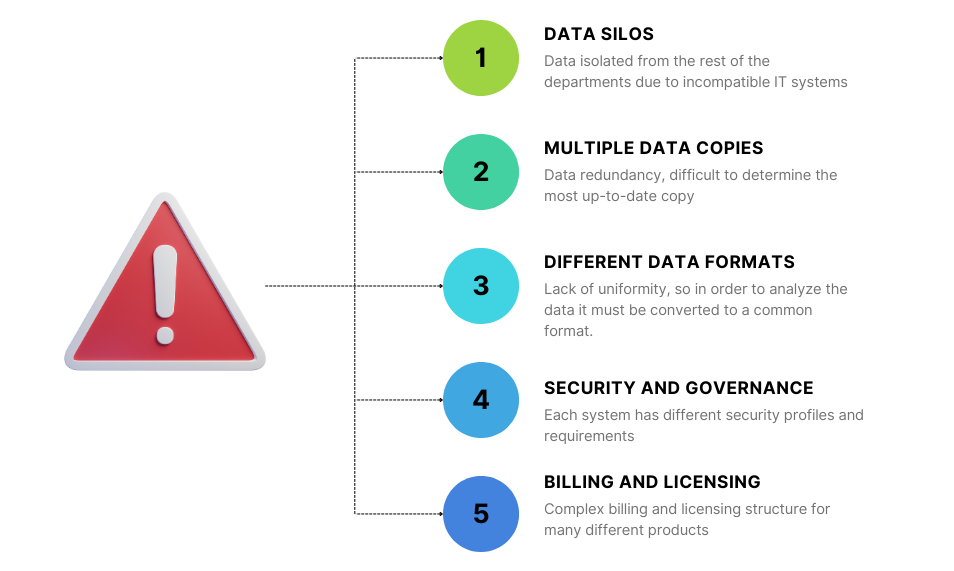

Fabric emerged from the complexity that companies faced in managing their data, where each department handled different products from multiple providers. This diversity made integration between them complex, fragile, and costly, creating significant challenges for organizations.

Fabric has been designed to address all the aforementioned problems. It is a completely new, re-architected approach to data management within an organization:

Fabric is a unified analytics solution for data integration, data engineering, real-time analytics, data science and BI needs with comprehensive security and governance.

Key Concepts

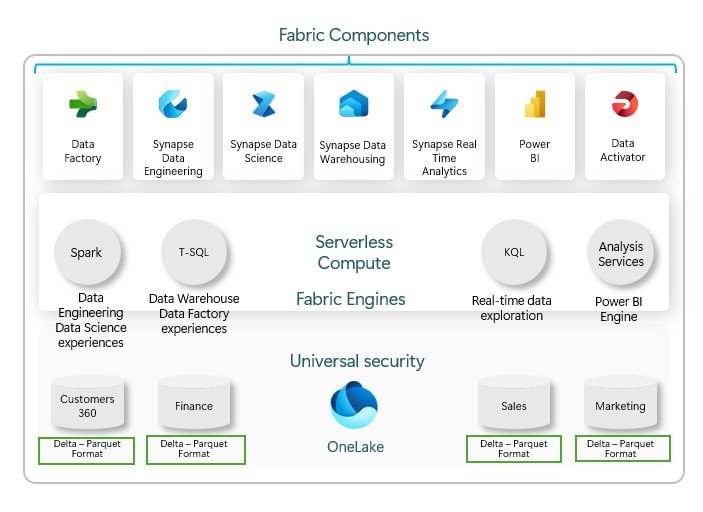

Fabric Components

Fabric offers the following seven fully integrated analytics experiences. Together they enable end-to-end data management and analysis, and each one is tailored to a specific persona and task:

- Data Factory: Integrate and transform data from various sources using over 200 connectors and Power Query.

- Synapse Data Engineering: A Spark platform for managing and optimizing data processing infrastructures, integrated with Data Factory.

- Synapse Data Science: Build and deploy machine learning models, integrating predictive insights into BI reports.

- Synapse Data Warehouse: Delivers industry-leading SQL performance and scale, with independent compute and storage scaling, and native support for the Delta Lake format.

- Real-Time Intelligence: Extracts and acts on insights from streaming data and event-driven scenarios in real time.

- Power BI: Connect, visualize, and share data insights easily, enabling intuitive decision-making.

- Data Activator: A no-code tool that triggers actions like notifications and workflows based on data patterns.

Serverless Compute

Serverless compute is a cloud computing model in which the provider manages and scales the infrastructure automatically, so users don't have to provision or tune compute resources themselves. This ensures high productivity, optimized memory usage, and reliable performance.

Fabric Engines

There are four compute engines in Fabric that provide the computational power needed for workloads:

- SQL Engine: Powers the Data Warehouse experience and uses the T-SQL language.

- Spark Engine: Supports the Data Engineering and Data Science experiences, working with Python (PySpark), R (SparkR), Scala, and SQL (Spark SQL).

- KQL Engine: Powers the Real-Time Intelligence experience and works with the Kusto Query Language (KQL).

- Analysis Services Engine: Powers the Power BI experience and works with the DAX and Power Query (M) languages.

Universal Security Model

The security model is based on Microsoft Entra ID (formerly Azure Active Directory) and provides comprehensive protection and precise access control across all Fabric engines and workspaces.

OneLake: The OneDrive for Data

OneLake solves the problem of data silos by centralizing the organization’s information into a single unified data lake, similar to OneDrive in its ease of access and management. It is designed to serve as the central repository for all analytical data.

Because it is built on Azure Data Lake Storage (ADLS) Gen2, OneLake can store both structured and unstructured data. Tables are stored in the Delta Parquet format, so different analytics engines can work on the same copy of the data without duplicating it. This improves efficiency and reduces redundancy.
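Because OneLake is compatible with ADLS Gen2, existing tools can address OneLake data through a URI. The following is a minimal sketch that builds such paths. It assumes the `onelake.dfs.fabric.microsoft.com` endpoint and the `<workspace>/<item>.<item type>/<path>` layout that OneLake uses. The helper function names and the workspace, lakehouse, and folder names are hypothetical placeholders.

```python
# Minimal sketch: build OneLake URIs for an item in a workspace.
# Assumes the ADLS Gen2-compatible endpoint and the path layout
# <workspace>/<item>.<item_type>/<relative path>; verify against current docs.

ONELAKE_HOST = "onelake.dfs.fabric.microsoft.com"


def _item_path(item: str, item_type: str, relative_path: str) -> str:
    """Join '<item>.<item_type>' with an optional relative path such as 'Tables/orders'."""
    base = f"{item}.{item_type}"
    relative_path = relative_path.strip("/")
    return f"{base}/{relative_path}" if relative_path else base


def onelake_abfss_uri(workspace: str, item: str, item_type: str, relative_path: str = "") -> str:
    """ABFS-style URI, the form typically passed to Spark readers."""
    return f"abfss://{workspace}@{ONELAKE_HOST}/{_item_path(item, item_type, relative_path)}"


def onelake_https_url(workspace: str, item: str, item_type: str, relative_path: str = "") -> str:
    """HTTPS (DFS endpoint) URL for the same location."""
    return f"https://{ONELAKE_HOST}/{workspace}/{_item_path(item, item_type, relative_path)}"


# Hypothetical workspace and lakehouse names
print(onelake_abfss_uri("SalesWorkspace", "SalesLakehouse", "Lakehouse", "Tables/orders"))
print(onelake_https_url("SalesWorkspace", "SalesLakehouse", "Lakehouse", "Files/raw/orders.csv"))
```

A lakehouse exposes `Tables` and `Files` folders, so the examples use those prefixes.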

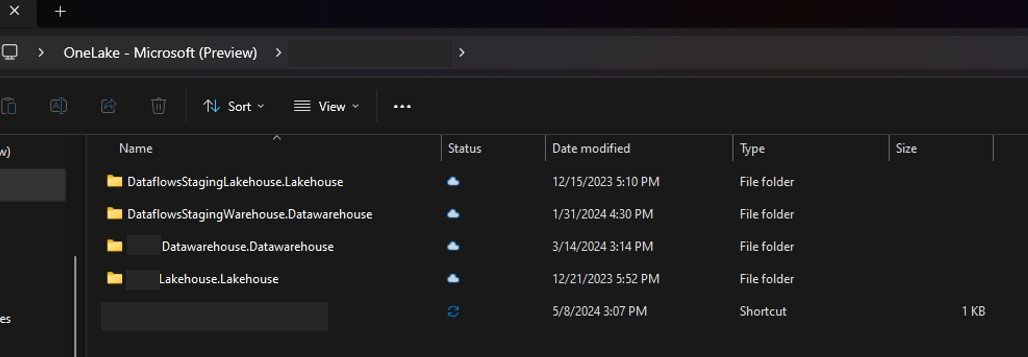

OneLake also offers an intuitive file explorer for Windows. It lets users navigate their data through folders and files, just as they do with files on their own computer.

Shortcuts

Shortcuts in OneLake act as symbolic links to other storage locations, either inside or outside of OneLake, and they exist independently of the data they point to. A shortcut only references a dataset, so each dataset lives in one place and does not need to be duplicated. Shortcuts can be created in Lakehouses and KQL databases. They can point to various data sources, such as ADLS Gen2, Amazon S3, or Dataverse, which allows flexible access to data across multiple cloud environments.

Any service inside or outside of Fabric can access the shortcuts through the OneLake API, allowing Spark, SQL, and Analysis Services to query data through them, facilitating a unified data experience.
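To make the "symbolic link" idea concrete, the following is a conceptual toy model in plain Python, not the OneLake API. The `Storage` and `Lakehouse` classes and their methods are hypothetical. In this model, a shortcut stores only a pointer: a target location plus a prefix. Reads are resolved through that pointer to the single stored copy, and deleting the shortcut leaves the target data untouched.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Storage:
    """Hypothetical stand-in for a storage location (OneLake, ADLS Gen2, Amazon S3, ...)."""
    files: dict[str, bytes] = field(default_factory=dict)


@dataclass
class Lakehouse:
    """Hypothetical lakehouse whose shortcuts map a name to (target storage, target prefix)."""
    shortcuts: dict[str, tuple[Storage, str]] = field(default_factory=dict)

    def create_shortcut(self, name: str, target: Storage, target_prefix: str) -> None:
        # Only the pointer is recorded; no data is copied.
        self.shortcuts[name] = (target, target_prefix.strip("/"))

    def read(self, path: str) -> bytes:
        # Split "<shortcut>/<rest>" and resolve <rest> against the shortcut's target.
        name, _, rest = path.partition("/")
        target, prefix = self.shortcuts[name]
        return target.files[f"{prefix}/{rest}" if prefix else rest]

    def delete_shortcut(self, name: str) -> None:
        # Removing the link does not touch the data it points to.
        del self.shortcuts[name]


# Hypothetical external bucket holding one CSV file
external = Storage({"sales/2024/orders.csv": b"id,amount\n1,100\n"})
lakehouse = Lakehouse()
lakehouse.create_shortcut("external_sales", external, "sales")

data = lakehouse.read("external_sales/2024/orders.csv")
print(data is external.files["sales/2024/orders.csv"])  # True: same single copy

lakehouse.delete_shortcut("external_sales")
print("sales/2024/orders.csv" in external.files)  # True: target data still exists
```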

Delta Parquet Format

Delta Parquet is the format in which data is stored in Microsoft Fabric. Parquet files provide an optimized columnar storage structure with efficient compression, and the Delta Lake layer adds a transaction log on top of them. It’s an open, vendor-independent format, which lets data professionals save time by unifying data formats for analysis.

Tables stored in this format, known as "Delta tables", are the foundation of the OneLake architecture. Because they provide ACID guarantees, they can reliably handle data operations at any scale, from simple reports to deep data dives.
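The ACID guarantees come from the Delta Lake transaction log. Alongside its Parquet files, each table keeps a `_delta_log` folder of numbered JSON commit files. Each line in a commit file is an action, such as `add` or `remove` for a data file. A reader rebuilds the table's current state by replaying those commits in order. A commit becomes visible only once its log file is written, so readers see either all of its changes or none of them.

The following is a simplified, stdlib-only illustration of that replay. It ignores checkpoints, schema and protocol actions, and other details of the real protocol. The Parquet file names and the helper functions are hypothetical.

```python
from __future__ import annotations

import json
from typing import Optional


def commit_file_name(version: int) -> str:
    """Delta commit files are named by their version, zero-padded to 20 digits."""
    return f"{version:020d}.json"


def active_files(log: dict[str, str], as_of: Optional[int] = None) -> set[str]:
    """Replay add/remove actions in version order to find the table's live data files.

    Simplified: ignores checkpoints, metadata/protocol actions and deletion vectors.
    """
    files: set[str] = set()
    for name in sorted(log):  # zero-padding makes lexical order equal version order
        version = int(name.split(".")[0])
        if as_of is not None and version > as_of:
            break
        for line in log[name].splitlines():  # one JSON action per line
            action = json.loads(line)
            if "add" in action:
                files.add(action["add"]["path"])
            elif "remove" in action:
                files.discard(action["remove"]["path"])
    return files


# Hypothetical history: initial load, an append, then a compaction into one file
log = {
    commit_file_name(0): '{"add": {"path": "part-000.parquet"}}',
    commit_file_name(1): '{"add": {"path": "part-001.parquet"}}',
    commit_file_name(2): "\n".join([
        '{"remove": {"path": "part-000.parquet"}}',
        '{"remove": {"path": "part-001.parquet"}}',
        '{"add": {"path": "part-002.parquet"}}',
    ]),
}
print(sorted(active_files(log)))           # ['part-002.parquet']
print(sorted(active_files(log, as_of=1)))  # ['part-000.parquet', 'part-001.parquet']
```

Passing `as_of` mimics Delta's time travel: replaying only up to an earlier version reconstructs the table as it was at that point.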

Domain

A domain is a way of logically grouping all the data in an organization that is relevant to an area or field, such as sales, marketing or finance. This structure allows each department to work independently within the same data lake without having to manage separate storage resources, ensuring that everyone can find and use the data they need without any chaos.

Each domain is managed by administrators and contributors, who can group multiple workspaces under it. This provides a management boundary for better control. Data within a domain can be endorsed (for example, promoted or certified). Endorsement highlights trusted data and distinguishes it from unofficial data, which helps avoid creating data swamps.
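The domain hierarchy can be pictured as a simple data model: a domain groups workspaces, workspaces contain items, and each item may carry an endorsement. The following is a hypothetical sketch for illustration only. The class names, fields, and ranking of endorsement levels (certified above promoted) are assumptions, not a Fabric API. The sketch shows how endorsement lets consumers narrow a domain down to its trusted data.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from enum import Enum


class Endorsement(Enum):
    NONE = "none"
    PROMOTED = "promoted"
    CERTIFIED = "certified"


# Assumed ordering: certified is the strongest signal of trustworthy data.
_RANK = {Endorsement.NONE: 0, Endorsement.PROMOTED: 1, Endorsement.CERTIFIED: 2}


@dataclass
class Item:
    name: str
    endorsement: Endorsement = Endorsement.NONE


@dataclass
class Workspace:
    name: str
    items: list[Item] = field(default_factory=list)


@dataclass
class Domain:
    """Hypothetical domain: a logical grouping of workspaces for one business area."""
    name: str
    workspaces: list[Workspace] = field(default_factory=list)

    def endorsed_items(self, minimum: Endorsement = Endorsement.PROMOTED) -> list[str]:
        # Walk every workspace in the domain and keep items at or above `minimum`.
        return [
            f"{ws.name}/{item.name}"
            for ws in self.workspaces
            for item in ws.items
            if _RANK[item.endorsement] >= _RANK[minimum]
        ]


sales = Domain("Sales", [
    Workspace("Sales Reporting", [
        Item("Revenue model", Endorsement.CERTIFIED),
        Item("Scratch notebook"),  # unofficial, not endorsed
    ]),
    Workspace("Sales Engineering", [Item("Orders lakehouse", Endorsement.PROMOTED)]),
])
print(sales.endorsed_items())                       # promoted or certified items
print(sales.endorsed_items(Endorsement.CERTIFIED))  # certified items only
```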